The Tommyknocker Developer

Stephen King Accidentally Predicted What AI Would Do to Developers

"As I say, we've never been very good understanders. We're not a race of super-Einsteins. Thomas Edison in space would be closer, I think."

-- Bobbi Anderson, The Tommyknockers (Stephen King, 1987)

Stephen King wrote that nearly forty years ago. He was describing aliens.

In the novel, a buried spacecraft in a small Maine town called Haven emits an invisible influence that transforms the residents. They become wildly inventive -- building gadgets out of kitchen appliances, batteries, and spare parts. Teleportation devices. Mind-control machines. Weapons that shouldn't work but do. The transformation grants what one widely quoted summary of the novel calls "a limited form of genius which makes them very inventive but does not provide any philosophical or ethical insight into their inventions." They build without understanding; they produce without comprehending. Edison in space, not Einstein.

The residents of Haven don't know why their inventions work. They don't care. The capability is intoxicating; the understanding feels unnecessary. Until it isn't.

King meant it as horror. He was writing about substance abuse, about what happens when power arrives without the wisdom to wield it. He didn't know he was writing about software development in 2025.

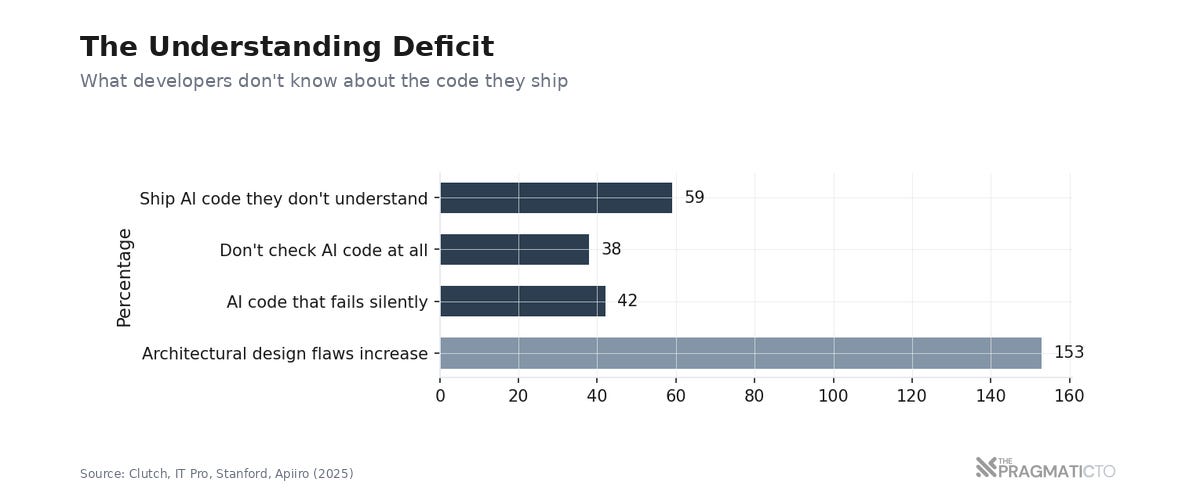

That trajectory is running across knowledge work right now -- and software is where it's most visible. Fifty-nine percent of developers ship AI-generated code they don't fully understand. Forty-two percent of AI-produced code fails silently -- it runs, passes basic checks, and produces wrong results. These aren't quality metrics. They're symptoms of an understanding deficit.

Developers are the canary. They're not the only ones breathing the gas.

Lawyers are submitting AI-generated briefs with hallucinated citations they can't verify -- 486 tracked cases worldwide and accelerating. Consultants deliver AI-generated strategy decks their junior analysts can't defend when clients push back. Endoscopists who used AI assistance for three months saw their detection rate for precancerous lesions drop from 28.4% to 22.4% when the AI was removed. The tools work. The understanding doesn't follow.

This is not an argument against AI tools. I use them. This is an argument about what we trade when we optimize for capability over comprehension -- and why that trade is more dangerous than most CTOs realize.

The Capability Explosion

The capability is real, and dismissing it destroys credibility.

GitHub's Octoverse data shows Copilot generating 46% of code for its users. Apiiro's study across 7,000 developers and 62,000 repositories measured a 4x velocity increase. A quarter of Y Combinator's W25 batch built codebases that are 95% AI-generated. Two years ago, these numbers would have sounded absurd; today they're table stakes for any team shipping software.

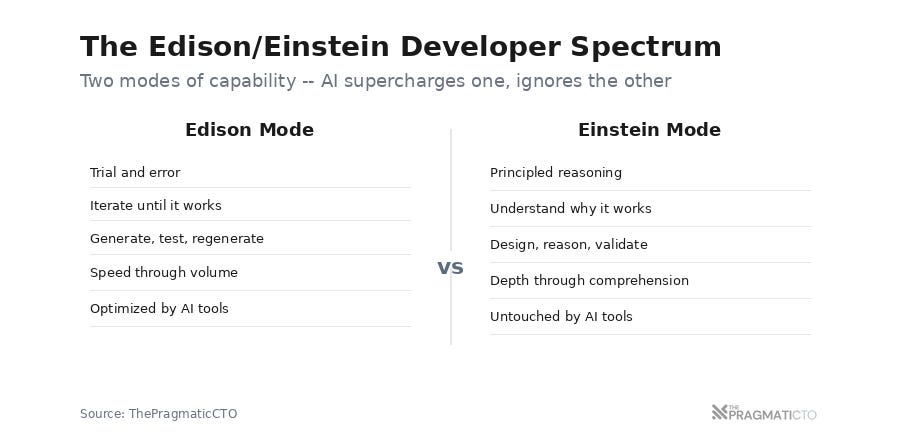

The genuine use cases are obvious to anyone who's used the tools honestly. Prototyping. Boilerplate. Exploring unfamiliar APIs. Getting a first draft of something you'll reshape. AI tools excel at what I'll call the Edison mode of development: generate, test, iterate, generate again. Trial and error at machine speed. For some problems, this works spectacularly.

At LiORA, AI tools are part of the workflow. Pretending otherwise would be dishonest; pretending the productivity gains aren't real would be worse. The tools accelerate meaningful work.

And yet.

Capability and understanding are different things. You can build a house without understanding load-bearing walls. You can wire a circuit without understanding current flow. The house stands; the wiring works. Until the day you need to add a second floor, or troubleshoot why a breaker keeps tripping, or explain to an inspector why the junction box is where it is. Capability gets you the building. Understanding lets you maintain it.

The question isn't whether AI tools make developers faster. They do. The question is what happens to the understanding that used to come bundled with the building. Before AI, producing code and understanding it were the same activity. You wrote it; you understood it by writing it. AI decouples those two things for the first time; you can produce without understanding. That decoupling is the story.

The Understanding Deficit

The numbers paint a specific picture.

Fifty-nine percent of developers use AI-generated code they don't fully understand. Thirty-eight percent don't review it at all -- because reviewing AI code takes longer than reviewing a colleague's work, and the whole point was speed. Meanwhile, architectural design flaws have spiked 153% and privilege escalation paths have jumped 322%. Architecture planning shows the lowest AI adoption of any development activity at 17.8% -- even teams that trust AI for everything else won't trust it for design decisions.

Addy Osmani calls this the 70% problem. AI tools get you 70% of the way to a complete solution -- fast. The last 30% is edge cases, architecture, security, maintainability; the parts that require genuine understanding of the system you're building. If you never developed that understanding -- if you skipped straight to the 70% output -- you can't finish the last 30%. You're stuck. Senior developers use AI to accelerate what they already understand; juniors use it to skip the fundamentals that would build that understanding. Same tool, opposite trajectories.

The pattern extends well beyond code.

In 2023, a lawyer named Steven Schwartz submitted a federal court brief in Mata v. Avianca citing six fabricated cases generated by ChatGPT. He was sanctioned $5,000. That incident was the visible tip; Damien Charlotin's database now tracks 486 AI hallucination cases worldwide, with 128 lawyers and 2 judges filing documents containing fabricated citations. The rate has accelerated from two cases per week to two or three per day. Lawyers are becoming Edisons -- producing briefs they can't verify because they don't hold the legal reasoning that would let them spot a hallucination.

A Lancet study on endoscopists found that after just three months of AI-assisted colonoscopy, doctors' detection rates for precancerous lesions dropped from 28.4% to 22.4% when the AI was removed. Three months. The skill atrophied that fast.

The consulting world is on the same trajectory. Firms are delivering AI-generated strategy decks that look polished until clients push back on the reasoning. Junior analysts who assembled the deck with AI prompts can't defend the recommendations because they never did the analysis that would let them. The consultant who can't reason beyond the deck is the developer who can't debug beyond the prompt. Different profession; same gap.

The understanding deficit isn't a software problem. It's a knowledge worker problem; software is where the consequences compile.

Peter Naur saw this coming in 1985. In his essay "Programming as Theory Building," he argued that software is fundamentally a theory held in the minds of its builders. The code is a lossy representation of that theory -- it captures the what but not the why. Decisions about architecture, trade-offs, constraints; that knowledge lives in people, not in files. Changes made by people who don't possess the theory, Naur wrote, "add to the program's decay, until it is unmaintainable." He was describing what happens when a team inherits code nobody understands. AI-assisted development creates that scenario at industrial scale: the original author is a model with no memory of what it produced, no theory of the program, no understanding to transfer. Naur predicted this exact failure mode forty years before it arrived. We're building companies on it.

The Edison Trap

Edison's method was empirical. Try everything. Test ten thousand filaments for the lightbulb. "I have not failed," he reportedly said; "I've found 10,000 ways that won't work." It's a legitimate approach -- iteration at volume, learning through trial and error, converging on solutions through sheer persistence.

Einstein's method was theoretical. Thought experiments. Principled reasoning about the nature of the universe. Fewer attempts; deeper insight per attempt. E=mc^2 didn't emerge from testing ten thousand equations. It emerged from understanding the relationship between mass and energy at a fundamental level. When Einstein got something right, he knew why it was right -- and could extend the insight to problems he hadn't yet encountered.

Both approaches produce results. They solve different problems.

Edison works when iteration is cheap and failure is recoverable. Prototype a new feature; try three approaches; ship the one that passes tests. This is how most developers interact with AI tools today. Generate code, run it, iterate on the errors, regenerate. Four times the velocity. The Edison approach at machine speed. For prototyping and exploration, it's transformative.

Einstein is necessary when you need to understand why something works -- because you'll need to maintain it, extend it, debug it under pressure at 2 AM, or explain to an auditor why a security decision was made. Understanding the system, not just producing the system. AI tools do nothing for this mode. Comprehension doesn't come from generating more code; it comes from reasoning about the code's relationship to the problem it solves.

The trap is when Edison mode is all you have.

If your development process is entirely Edisonian -- generate, test, ship, repeat -- you can build things you can't maintain. You produce systems nobody understands. The prototype works; production demands something the prototype can't provide. Simon Willison draws the line clearly: AI-assisted coding means using the tools while maintaining review, testing, and comprehension standards. Vibe coding means building software without reviewing the code at all. The distinction isn't about the tools; it's about whether understanding survives the process.

The boundary between prototype and production is where the Tommyknocker developer gets stuck. Edison mode got them to 70%. Einstein mode -- the mode AI doesn't accelerate -- is required for the remaining 30%. And if you never built the understanding, you can't switch modes. You're stuck at 70% with no way forward. A team of Edisons can build fast; a team of Edisons can't maintain what they built when the requirements shift and the architecture needs to evolve.

The Haven Trajectory

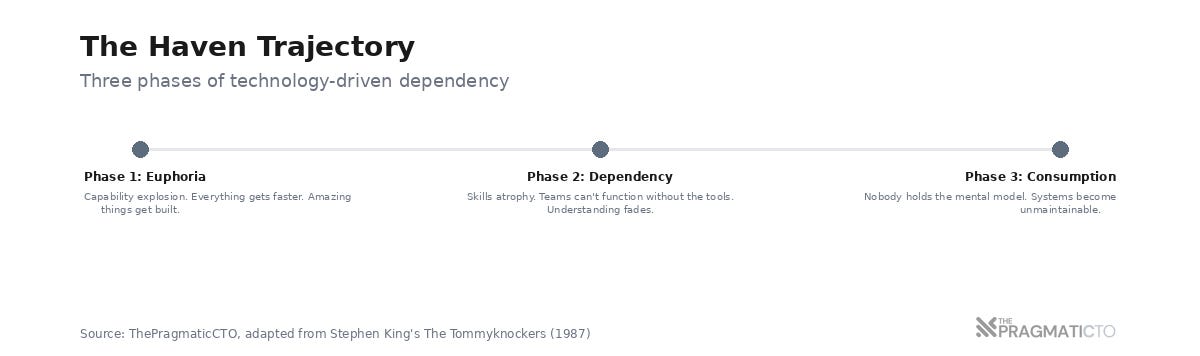

In King's novel, Haven's transformation follows three phases. Phase one is euphoria: capability explodes, amazing things get built, the town is electric with possibility. Phase two is dependency: the residents can't function without the ship's influence, old skills atrophy, the technology becomes load-bearing. Phase three is consumption: the transformation goes too far, and the technology destroys the people who depended on it.

I'm not predicting phase three. I'm observing phase two. And phase two has plenty of precedent on its own.

The pattern has played out before in other domains. Greater GPS use correlates with worse spatial memory -- GPS users travel longer distances, take more time, and develop weaker cognitive maps than people who navigate with paper maps. Pilots can lose manual flying skills in as little as two months without practice; when Air France 447's automation failed in 2009, the crew's manual flying errors killed 228 people. The automation paradox: the more capable the automated system, the less capable the human operator becomes, and the more catastrophic the failure when automation breaks down.

The same pattern is running across knowledge work simultaneously, and the evidence is mounting. Microsoft's 2025 Future of Work report found that knowledge workers using AI were ceding problem-solving expertise to the system -- focusing on gathering and integrating AI responses rather than reasoning independently. The endoscopist study showed skill erosion in three months. GPS degrades spatial memory over years. Pilots lose manual skills in two months. The timelines are compressing.

There's a critical difference in failure modes across these domains. GPS fails obviously -- you're lost. Autopilot fails dramatically -- people die. AI-generated work fails silently. The hallucinated legal brief looks like a real brief. The AI-generated strategy deck looks like a real strategy deck. The vibe-coded service passes its tests. Forty-two percent silent failure rate. The system works until it doesn't; when it doesn't, nobody knows why because nobody understood how it worked in the first place. The silence is the danger; you don't know the understanding is gone until the moment you need it.
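
A toy illustration of what silent failure looks like in code. The `median` function here is hypothetical, invented for this example rather than drawn from any of the studies above; it stands in for generated code that runs cleanly and passes the one check anyone bothered to write:

```python
def median(values: list[float]) -> float:
    """Return the middle value of a list (hypothetical, and subtly wrong)."""
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


# The basic check a hurried reviewer might run: it passes.
assert median([3, 1, 2]) == 2

# An even-length input raises no error and returns a plausible number,
# but the true median of [1, 2, 3, 4] is 2.5, not 3.
print(median([1, 2, 3, 4]))
```

Nothing crashes and nothing is logged. Only someone who knows what a median is supposed to be for an even-length list would think to ask the second question.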

The erosion is invisible until the technology fails. That's the Haven trajectory. And unlike King's novel, there's no Jim Gardener with a steel plate in his head to launch the ship into space when things go wrong.

What I'm Doing

At LiORA, we're in the conservative lane. The team uses AI for code reviews, research, and compiling content -- not for code generation. We tried AI code gen; the output was sloppy for our context. AI accelerates the research and review work -- Edison-compatible tasks -- but it hasn't earned trust for the building itself.

On personal projects -- ShipLog.ca, StructPR.dev -- I'm running the aggressive lane. Agentic coding workflows with early success, but that success required extensive guardrails. Detailed context files -- CLAUDE.md, architectural constraints, coding standards -- fed to the agent before it writes a single line. The agent needs the theory of the program before it can contribute to it. Naur's insight, operationalized.

The next experiment: test and review gates outside the agent's scope. A subset of tests the AI can't see or modify, but must pass. The agent proves understanding by surviving checks it can't game. The personal projects are the sandbox; the team gets the lessons after they're proven.
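
A minimal sketch of one piece of such a gate, under assumptions not stated above: the held-out tests live in a directory outside the agent's workspace, and a manifest of file hashes was recorded when a human last reviewed them. The function names `digest_tree` and `tampered_files` are illustrative, not part of any existing tool.

```python
import hashlib
from pathlib import Path


def digest_tree(root: Path) -> dict[str, str]:
    """Map each file under root (by relative path) to its SHA-256 hex digest."""
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def tampered_files(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return paths added, removed, or changed since the manifest was recorded."""
    current = digest_tree(root)
    return sorted(
        name
        for name in current.keys() | manifest.keys()
        if current.get(name) != manifest.get(name)
    )
```

A CI job could fail the build whenever `tampered_files` returns anything, and only then run the held-out tests against the agent's changes. The hash check covers the "can't modify" half; keeping the directory out of the agent's context covers the "can't see" half.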

The bet is that guardrails are the Einstein layer. You don't need every developer to hold the full theory of the program if the system enforces understanding at the gates. Whether that bet holds is something I'm still figuring out. The two-lane approach -- conservative at LiORA, aggressive in personal R&D -- is how I'm testing the boundary without putting the team at risk.

Questions for CTOs

How many engineers on your team can explain why the code they shipped last sprint works the way it does -- not what it does, but why it was designed that way?

If your AI tools stopped working tomorrow, could your team maintain the systems they've built? How long before the understanding gap becomes a maintenance crisis?

When was the last time a developer on your team traced a production issue to root cause without AI assistance?

Are you hiring for understanding or for velocity? AI can provide velocity. Understanding is the human part.

The trajectory isn't unique to your engineering team. Every knowledge function in your organization -- legal, strategy, analytics -- is breathing the same gas. The engineering org is where you'll see it first; it won't be where it stops.

The Tommyknockers didn't know what they were building or why it worked. They just knew it was powerful. Haven paid the price for the gap between capability and comprehension.

The question for every CTO -- for every leader of knowledge workers -- is whether your team is building understanding, or just building.