OpenAI Didn't Buy a Product. They Bought a Distribution Channel.

The Token Economics Behind the OpenClaw Acqui-Hire

On February 15, 2026, Sam Altman announced on X that OpenClaw creator Peter Steinberger was joining OpenAI. He called Steinberger "a genius with a lot of amazing ideas about the future of very smart agents interacting with each other to do very useful things for people." The framing was deliberate: talent, vision, the future of personal agents. Every analysis published since has dutifully followed the same narrative: OpenAI acquired a brilliant founder, absorbed the most viral open-source project in GitHub history, and positioned itself to dominate the agent layer.

That narrative isn't wrong. But it's incomplete in a way that matters.

Buried beneath the talent story is a financial reality that almost nobody is discussing. OpenClaw's default provider hierarchy places Anthropic first---above OpenAI, above Google, above every other model provider. The default primary model is `anthropic/claude-opus-4-6`. Steinberger himself recommended Claude Opus 4.6 for heavy agent workloads; community guides consistently called Claude Sonnet 4.5 the "sweet spot" for most users; independent benchmarks found that Claude outperformed GPT-4o on long-context tasks, prompt-injection resistance, and multi-step tool use---the exact capabilities autonomous agents need most. One industry analyst put it bluntly: "OpenClaw was one of the biggest drivers of paying API traffic to Anthropic, since most users ran it on Claude."

OpenAI didn't just buy a genius. I believe they bought a distribution channel that was sending a competitor's revenue through the roof, and they are about to redirect it.

The Token Furnace

Understanding why this acquisition makes financial sense requires understanding how much money autonomous agents burn. OpenClaw isn't a chatbot; it's a 24/7 autonomous system that connects to your email, calendar, messaging platforms, and web browser, chaining multi-step workflows together with persistent memory across sessions. Every one of those operations consumes API tokens; the architecture ensures that consumption is extraordinary.

Six factors make OpenClaw a token furnace. Context accumulation accounts for 40-50% of total spend, because the entire conversation history is resent with every API call; in one documented case, a session of roughly 35 messages had grown to 2.9 megabytes, occupying 56-58% of a 400,000-token context window. Tool outputs from shell commands, file reads, and web fetches deposit thousands of additional tokens into that context. OpenClaw's system prompt, at 5,000 to 10,000 tokens, ships with every single API call regardless of whether the user is asking a complex question or checking whether any tasks exist. And the default "heartbeat" check runs every thirty minutes, sending the entire 120,000-token context window to the API for what amounts to a status ping. At Opus pricing, that heartbeat alone costs approximately $0.75 per check, or roughly $250 per week across 336 checks, for an agent that mostly reports nothing.
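The heartbeat arithmetic is easy to check. The sketch below is a minimal illustration that uses only the figures quoted above (a check every thirty minutes, a 120,000-token context, about $0.75 per check). The function and constant names are my own, and the per-million-token rate it prints is only what those figures imply, not a published price.

```python
# Figures quoted in the article; names are illustrative, not OpenClaw settings.
HEARTBEAT_INTERVAL_MINUTES = 30
CONTEXT_TOKENS = 120_000
COST_PER_CHECK_USD = 0.75

MINUTES_PER_WEEK = 7 * 24 * 60  # 10,080


def heartbeat_cost_per_week(interval_minutes: int, cost_per_check: float) -> float:
    """Weekly cost of a fixed-interval heartbeat that resends the full context."""
    checks_per_week = MINUTES_PER_WEEK // interval_minutes
    return checks_per_week * cost_per_check


checks_per_week = MINUTES_PER_WEEK // HEARTBEAT_INTERVAL_MINUTES
weekly_cost = heartbeat_cost_per_week(HEARTBEAT_INTERVAL_MINUTES, COST_PER_CHECK_USD)

# Rate implied by the article's own numbers (derived, not a quoted price list).
implied_usd_per_million_tokens = COST_PER_CHECK_USD / CONTEXT_TOKENS * 1_000_000

print(f"{checks_per_week} checks per week -> ${weekly_cost:.2f} per week")
print(f"implied input rate: ${implied_usd_per_million_tokens:.2f} per million tokens")
```

Halving the check frequency halves the bill, which is why the heartbeat interval is one of the first settings worth reviewing on an idle agent.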

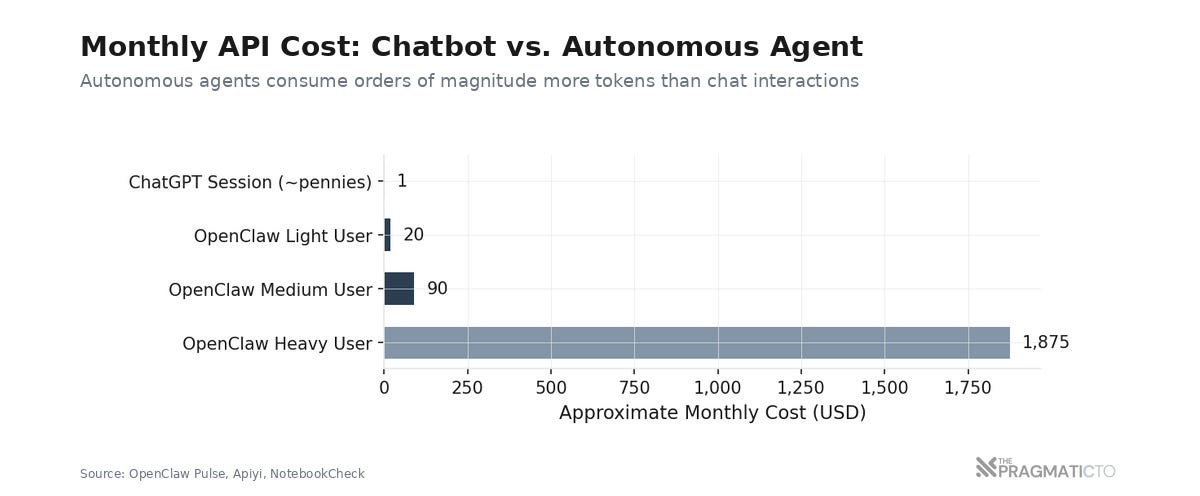

The per-user costs that result from this architecture are unlike anything the chatbot era prepared us for. Light users consuming 5-20 daily messages spend $10-30 per month on Claude Sonnet; medium users running automated workflows and cron jobs land between $30 and $150; heavy users operating 24/7 assistants with browser automation can reach $750 to $3,000 or more per month on Opus-tier models. The extreme documented cases are worse still. Federico Viticci, the tech blogger, burned through $3,600 in a single month; a German publication hit $100 in a single day of testing; one Moltbook user watched $8 disappear every thirty minutes---$380 per day---just processing new social posts.

Compare that to a ChatGPT conversation, which might consume a few thousand tokens per session at pennies per interaction. The gap between chatbot-era economics and agent-era economics is not incremental; it is orders of magnitude.

Follow the Money

Those tokens are revenue for someone; the question that matters---the one that reframes the entire acquisition---is who.

OpenClaw is model-agnostic by design; users can configure any provider through their own API keys. But defaults drive behavior, and OpenClaw's defaults overwhelmingly favor Anthropic. The provider priority hierarchy in the official documentation reads Anthropic first, then OpenAI, then OpenRouter, followed by Gemini and a long tail of smaller providers; when a user configures an Anthropic API key, Claude models are automatically set as primary. The original project was named "Clawdbot"---a phonetic play on Claude---and the community that coalesced around it adopted Claude as the consensus recommendation for agent workloads. Claude's advantages in long-context reasoning, prompt-injection resistance, and multi-step tool use mapped precisely to what autonomous agents demand most; even users who started with OpenAI keys often migrated to Anthropic after community forums pointed them there.

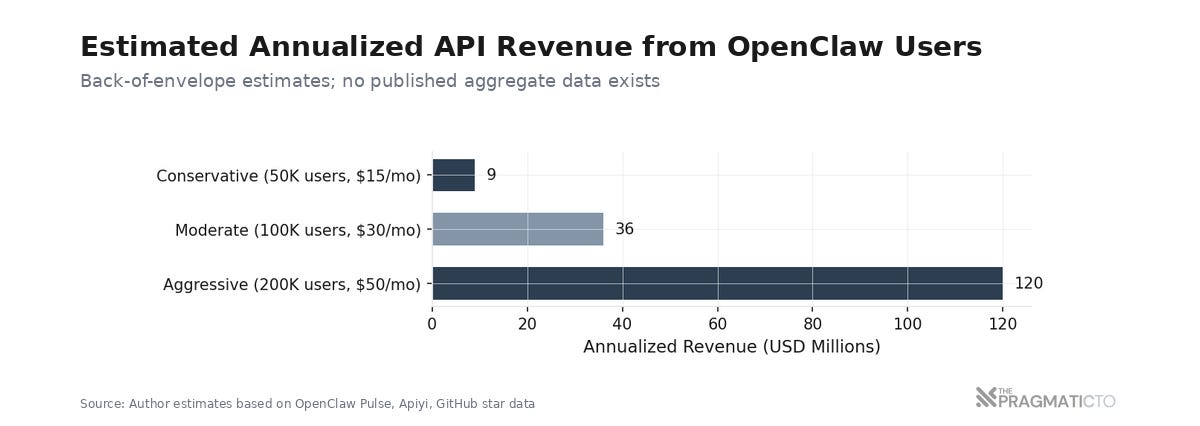

The aggregate revenue implications of this default are significant, even using conservative assumptions. OpenClaw crossed 180,000 GitHub stars and had 1.5 million agents created by early February 2026. GitHub stars-to-active-user conversion for developer tools typically runs between 10% and 30%, which on stars alone would imply only 18,000 to 54,000 active users; given the 1.5 million agents created, I assume an active user base somewhere between 50,000 and 200,000 people. Multiply by the documented average monthly API spend of $15 to $50 per user, and the back-of-envelope math produces annualized figures of $9 million at the conservative end, $36 million at the moderate estimate, and $120 million at the aggressive end, with the majority flowing to Anthropic.
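For readers who want to reproduce the back-of-envelope figures, here is a minimal sketch. The conservative and aggressive inputs are the endpoints given above. The moderate inputs (100,000 users at $30 per month) are my assumption: they are one pairing that yields the $36 million figure, and the text does not state them.

```python
def annualized_spend(active_users: int, monthly_spend_usd: int) -> int:
    """Annual API spend for a user base at a flat average monthly spend."""
    return active_users * monthly_spend_usd * 12


# (active users, average monthly API spend in USD)
scenarios = {
    "conservative": (50_000, 15),
    "moderate": (100_000, 30),  # assumed inputs; not stated in the article
    "aggressive": (200_000, 50),
}

estimates = {
    name: annualized_spend(users, spend)
    for name, (users, spend) in scenarios.items()
}

for name, usd in estimates.items():
    print(f"{name:>12}: ${usd / 1_000_000:.0f}M per year")
```

The spread between the low and high cases is more than 13x, which is a fair picture of how uncertain the underlying inputs are.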

These are rough numbers, and no published aggregate data exists for OpenClaw API spend. But even the conservative figure of $9 million annually represents a non-trivial revenue stream; OpenAI's API business hit $1 billion ARR in late 2025, while Anthropic targets $26 billion in revenue by the end of 2026. A single agent platform driving $36 million or more in annual API spend to a competitor is the kind of leak that a company projecting $14 billion in losses for 2026 cannot afford to ignore.

Anthropic's Gift to OpenAI

The irony of this acquisition sharpens when you trace the timeline of Anthropic's own decisions.

Steinberger built Clawdbot in November 2025---named after Claude, built for Claude, defaulting to Claude, driving every token of its explosive growth directly into Anthropic's API revenue. Within weeks the project became the fastest-growing open-source repository in GitHub history, crossing 180,000 stars in roughly sixty days and generating the kind of organic developer evangelism that no marketing budget can buy. Steinberger was losing $10,000 to $20,000 per month running OpenClaw, and the vast majority of that cost was API spend; infrastructure costs ran only $10-25 per month for the servers themselves. He was subsidizing Anthropic's revenue out of his own pocket while building their most powerful distribution channel.

Anthropic's response to this gift was to send lawyers.

On January 27, 2026, Anthropic issued a trademark cease-and-desist over "Clawd" being too phonetically similar to "Claude." Steinberger renamed the project to Moltbot, then to OpenClaw within two days; the Hacker News community called it an "Anthropic fumble" that damaged the company's reputation in the open-source community while handing OpenClaw a fresh wave of viral attention through the drama. One analyst captured the absurdity precisely: "The OpenClaw creator built this project for Claude, named it after Claude, and was actively driving revenue and developer mindshare to Anthropic's API. Instead of recognizing what they had---an unpaid evangelist building the most viral agent ecosystem in history on top of their model---Anthropic sent lawyers."

Eighteen days later, OpenAI swooped in and acqui-hired Steinberger. The Monday Morning Substack called it a potential "fumble of the century for Anthropic," noting that Anthropic's enterprise market share had grown to 40% while OpenAI declined to 27%---a shift partially driven by developer tools and agent ecosystems running on Claude. Anthropic was winning the developer distribution war through organic adoption; then it chose to antagonize the single person doing more for that adoption than anyone on its payroll.

The Distribution Channel Thesis

The conventional reading of this acquisition focuses on three assets: Steinberger's talent, his architectural knowledge of agent systems, and the community goodwill attached to OpenClaw. All three are real and valuable; none of them explain the speed of the move, the personal involvement of Altman, or the competitive urgency of bidding against Mark Zuckerberg's direct outreach via WhatsApp.

A distribution channel thesis does.

By bringing Steinberger in-house, OpenAI can shift the default model hierarchy in whatever agent products emerge from his work---and defaults, as every CTO who has watched browser search engine deals knows, drive the overwhelming majority of usage. OpenAI captures a proven demand generation channel; OpenClaw demonstrated that autonomous agents create enormous, persistent, recurring API demand that dwarfs anything a chatbot produces. A ChatGPT user might generate a few thousand tokens per conversation; an OpenClaw agent running 24/7 with heartbeats, cron jobs, and multi-step workflows generates 5 to 200 million tokens per month. If even 100,000 users run agents on OpenAI models at those consumption rates, the resulting 500 billion to 20 trillion tokens per month would represent a significant fraction of OpenAI's total API throughput---which currently stands at 6 billion tokens per minute.
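To put that range in proportion, the sketch below converts the quoted 6 billion tokens per minute into a monthly total and compares it with the projected agent demand. It uses only the figures in the paragraph above; the 30-day month and the variable names are my assumptions.

```python
USERS = 100_000
TOKENS_PER_USER_LOW = 5_000_000       # 5 million tokens per month
TOKENS_PER_USER_HIGH = 200_000_000    # 200 million tokens per month
OPENAI_TOKENS_PER_MINUTE = 6_000_000_000

MINUTES_PER_MONTH = 60 * 24 * 30      # assumes a 30-day month

monthly_throughput = OPENAI_TOKENS_PER_MINUTE * MINUTES_PER_MONTH
demand_low = USERS * TOKENS_PER_USER_LOW
demand_high = USERS * TOKENS_PER_USER_HIGH

share_low = demand_low / monthly_throughput
share_high = demand_high / monthly_throughput

print(f"monthly throughput: {monthly_throughput / 1e12:.1f} trillion tokens")
print(f"agent demand: {demand_low / 1e12:.1f} to {demand_high / 1e12:.1f} trillion tokens")
print(f"share of throughput: {share_low:.2%} to {share_high:.2%}")
```

On these numbers the share runs from about 0.2% at the low end to roughly 7.7% at the high end, so the "significant fraction" claim depends on heavy per-user consumption.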

The move also denies Anthropic its most effective unpaid distribution partner at a moment when distribution matters as much as model quality; it locks Steinberger's architectural thinking into OpenAI's agent-native infrastructure---the Agents SDK, the Responses API, the Frontier Platform---at a time when 93% of companies processing more than one trillion tokens on OpenAI already use framework-based agent orchestration.

No insider has confirmed that token economics or revenue redirection played a role in the acquisition decision; this is purely my analysis, based on substantial yet circumstantial evidence. The strongest version of this thesis is not that OpenAI was protecting its own revenue; it's that OpenAI was capturing a revenue channel that was primarily benefiting a competitor, at a moment when both companies are burning billions to establish market dominance.

Agents Are the New Apps

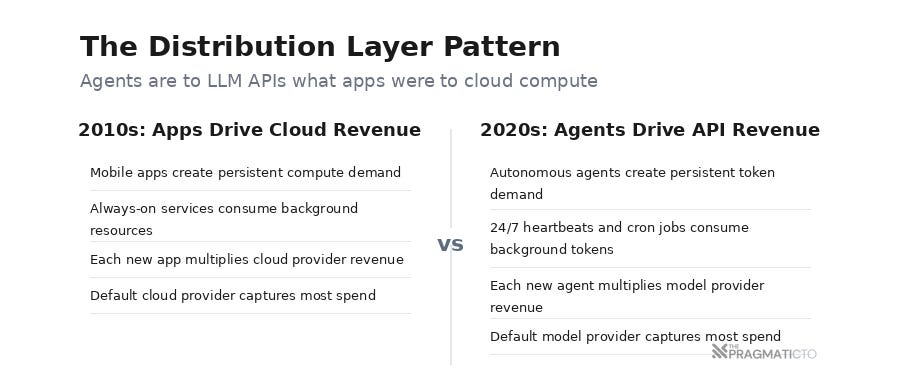

The OpenClaw acquisition fits a broader pattern that I believe will define the economics of AI infrastructure for the next three to five years: autonomous agents are becoming the distribution layer that drives model provider revenue, in exactly the way that mobile apps became the distribution layer that drove cloud compute revenue.

The structural parallel is almost exact. In the 2010s, mobile apps created persistent compute demand---always-on services running in the background, pushing notifications, syncing data, processing transactions---that drove AWS, GCP, and Azure revenue far beyond what web applications alone would have generated. SaaS products did the same for payment processing; every recurring subscription flowing through Stripe created persistent transaction volume that compounded as the ecosystem grew. Autonomous agents are now doing this for LLM APIs; an agent running 24/7 with periodic heartbeats, automated workflows, and multi-step reasoning creates persistent token demand that compounds with every new user, every new integration, every new automated task.

The scale of the opportunity explains the urgency. The total LLM API market processes approximately 50 trillion tokens per day, with code generation and agent workflows accounting for 40-50% of that volume. OpenAI's token throughput has grown 700% year over year; agentic inference is the fastest-growing usage pattern across every major API provider. Whoever controls the agent layer---the platforms where autonomous workflows are designed, deployed, and defaulted to specific models---controls the revenue that flows from them.

Default model settings in agent platforms are becoming the new default search engine deals. Google paid Apple billions annually to remain Safari's default search engine because defaults drive behavior at scale; the economics of agent platform defaults follow the same logic, with token revenue replacing advertising revenue as the prize.

What This Means for Your Budget

If you're running or evaluating agent infrastructure, the token economics of this acquisition carry practical implications that most planning processes have not caught up with.

The first is that your agent platform's default model is not a neutral technical choice---it's a revenue channel decision for someone else. Every token your autonomous agents consume is revenue for a model provider; the provider your platform defaults to captures the vast majority of that spend because most users never change defaults. When you evaluate agent platforms, understanding the default provider hierarchy is as important as understanding the capability benchmarks; the platform's incentives shape which models your agents will call, how aggressively context is managed, and whether token efficiency is a design priority or an afterthought.
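One way to see who your defaults are paying is to tally spend by provider from whatever usage export your platform gives you. The sketch below is a hypothetical illustration: the records, the dollar amounts, and the `openai/example-model` identifier are invented for the example, and it assumes model identifiers follow the `provider/model` form used by OpenClaw's default `anthropic/claude-opus-4-6`.

```python
from collections import defaultdict

# Hypothetical usage records: (model id in "provider/model" form, spend in USD).
usage_records = [
    ("anthropic/claude-opus-4-6", 412.50),
    ("anthropic/claude-sonnet-4-5", 96.25),
    ("openai/example-model", 41.25),
]


def spend_by_provider(records):
    """Sum spend per provider, taking the provider from the model id prefix."""
    totals = defaultdict(float)
    for model_id, usd in records:
        provider = model_id.split("/", 1)[0]
        totals[provider] += usd
    return dict(totals)


totals = spend_by_provider(usage_records)
grand_total = sum(totals.values())

# Largest destination first.
for provider, usd in sorted(totals.items(), key=lambda item: item[1], reverse=True):
    print(f"{provider:<10} ${usd:>8.2f}  ({usd / grand_total:.1%} of spend)")
```

Running a tally like this before and after a platform upgrade is a cheap way to notice when a default has changed underneath you.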

The second is that the cost structure of autonomous agents bears almost no resemblance to the cost structure of chatbot-era AI tools. A developer using GitHub Copilot generates predictable, bounded API costs that correlate with working hours. A fleet of autonomous agents running 24/7 with heartbeat checks, persistent memory, and multi-step workflows generates costs that correlate with uptime; uptime is 168 hours per week regardless of whether any productive work is happening. The heartbeat problem alone can cost $250 per week per agent at Opus pricing; multiply that across a team of twenty agents and you're spending $260,000 annually on status pings. Most AI budgets were built for the chatbot era and have not been recalibrated for always-on autonomous systems.
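The fleet figure follows directly from the weekly heartbeat cost. A minimal sketch, using the $250 per week and twenty agents quoted above; the 40-hour work week used for comparison is my assumption, added only to show how uptime-driven cost differs from working-hours-driven cost.

```python
HOURS_PER_WEEK = 7 * 24          # 168 hours of agent uptime
WORK_HOURS_PER_WEEK = 40         # assumed human working week, for comparison
WEEKS_PER_YEAR = 52


def annual_heartbeat_cost(weekly_cost_per_agent: int, agents: int) -> int:
    """Yearly heartbeat spend for a fleet of identical always-on agents."""
    return weekly_cost_per_agent * WEEKS_PER_YEAR * agents


fleet_cost = annual_heartbeat_cost(weekly_cost_per_agent=250, agents=20)
uptime_ratio = HOURS_PER_WEEK / WORK_HOURS_PER_WEEK

print(f"fleet heartbeat cost: ${fleet_cost:,} per year")
print(f"agent uptime is {uptime_ratio:.1f}x a {WORK_HOURS_PER_WEEK}-hour work week")
```

The cost scales linearly with the number of agents and with uptime, so a budget line sized by headcount will understate it.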

The third is that vendor lock-in through agent defaults is the new lock-in vector that most CTOs are not even aware of. Once your workflows, persistent memory, integration configurations, and skill marketplace dependencies are built on a specific agent platform with a specific model default, switching costs compound rapidly. This is not the familiar lock-in of cloud provider APIs or database engines; it's lock-in through the accumulated context and behavioral tuning of autonomous systems that learn and adapt over time. The switching cost isn't technical migration alone---it's the loss of institutional memory that your agents have built over months of operation.

What I'm Watching For

Four signals over the next six to twelve months will determine whether the distribution channel thesis holds.

The most telling will be whether OpenClaw's default model hierarchy shifts from Anthropic to OpenAI. The project is moving to an independent foundation, but if the defaults change within the first two releases after the transition, the revenue redirection motive becomes difficult to argue against; a subtler version of the same signal would be OpenAI offering preferential API pricing or free tiers specifically for OpenClaw users---a subsidy that looks like community support but functions as customer acquisition for API revenue.

The second signal is whether Steinberger's first projects at OpenAI resemble "OpenClaw for GPT"---consumer-facing autonomous agents built on OpenAI's infrastructure that inherit the design patterns and community goodwill of the project he built. If the agent architecture he designed to drive Claude usage gets rebuilt to drive GPT usage, the capture is complete.

The third is Anthropic's response; if Anthropic acquires or deeply partners with another agent platform within the next six months, it validates that they recognize the distribution channel they lost. Silence would suggest they either disagree with this framing or haven't yet grasped what happened.

The fourth is broader: whether agent platform defaults become a negotiation point in enterprise API contracts the way search engine defaults became negotiation points in browser contracts. If model providers start paying agent platform developers for default placement, the parallel to search engine economics will be fully realized---and the OpenClaw acquisition will look less like an acqui-hire and more like the opening move in a distribution war.

Questions Worth Asking

When you evaluate an agent platform, do you trace where your API spend goes? Not the total cost---the destination. Do you know which model provider benefits most from your agent infrastructure, and whether that alignment was a deliberate choice or an inherited default?

Have you budgeted for the token economics of autonomous agents, or are you still forecasting based on chatbot-era usage patterns? The difference between a developer using an AI coding assistant and a fleet of agents running 24/7 is not 2x or 5x---it's 100x to 1,000x in token consumption, and it scales with uptime rather than headcount.

If your agent platform's default model changed tomorrow, would you notice? Would your team? Would your finance team?

The acqui-hire headlines have moved on. The token economics haven't. And if I'm right that agents are becoming the distribution layer for model provider revenue, then understanding who controls your agent defaults is no longer a technical question. It's a financial one.